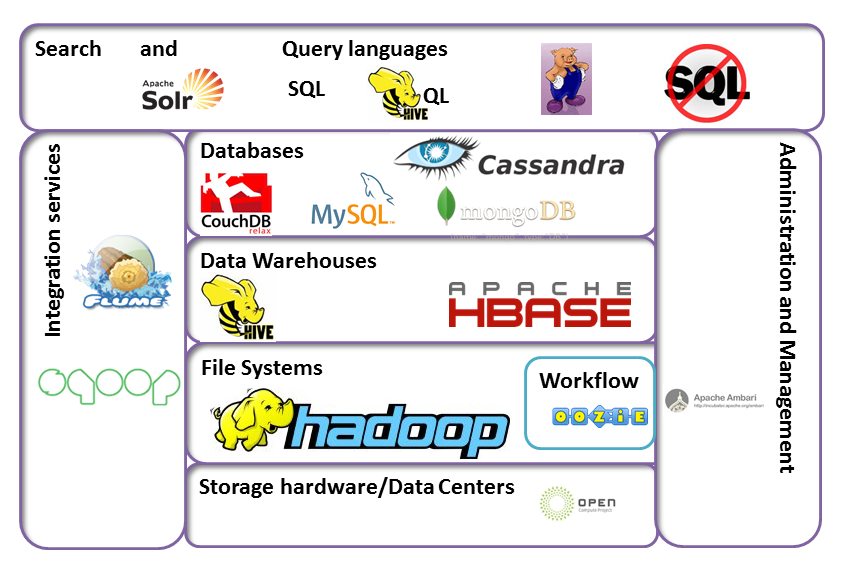

Big Data has been one of the biggest buzzwords of recent years. Technology growth is reshaping the world, and it has turned out to be the key to handling huge data sets.

I became fascinated by these technologies. The following list is a reference of the ones I have come across.

- HDFS (Hadoop Distributed File System) is designed to handle large data sets. Its original design was inspired by the Google File System (GFS). Apache Hadoop is a framework built for distributed processing; it uses MapReduce and YARN to run ELT (Extract, Load and Transform) jobs on data stored in HDFS.

- MapReduce was introduced by Google, which was running ETL jobs on huge data sets internally and published the paper that started it all. After Google's paper, Amazon came up with its own hosted Hadoop MapReduce service, called Elastic MapReduce (EMR).
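To make the MapReduce idea concrete, here is a minimal single-process sketch of the classic word count in plain Python. It is not Hadoop or EMR code; the function names (`map_phase`, `shuffle`, `reduce_phase`, `word_count`) are made up for illustration, and a real framework would run the map and reduce steps in parallel across many machines.

```python
from collections import defaultdict


def map_phase(document):
    """Emit a (word, 1) pair for every word in one input record."""
    for word in document.lower().split():
        yield word, 1


def shuffle(pairs):
    """Group emitted values by key (the step between map and reduce)."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return groups


def reduce_phase(key, values):
    """Combine all values collected for one key into a single result."""
    return key, sum(values)


def word_count(documents):
    """Run map, shuffle and reduce in sequence over a list of strings."""
    pairs = (pair for doc in documents for pair in map_phase(doc))
    return dict(reduce_phase(k, v) for k, v in shuffle(pairs).items())


if __name__ == "__main__":
    print(word_count(["big data is big", "data about data"]))
```

The point of the split is that `map_phase` and `reduce_phase` only ever see one record or one key at a time, which is what lets a framework spread the work over a cluster.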

- Apache Spark is an emerging technology widely used by Big Data developers and data scientists. Spark works with data through the concept of a distributed data set, known as the Resilient Distributed Dataset (RDD). Beyond MapReduce-style processing, it includes Spark SQL for SQL and structured data processing, MLlib for machine learning (ML), GraphX for graph processing, and Spark Streaming.

- Mahout is very popular among data scientists. It bundles a collection of machine learning libraries for analyzing huge data sets on Hadoop - https://mahout.apache.org/

- Lucene is best known as the core component of search indexing servers, because Lucene Core has been used to build them. Lucene comes bundled with text analysis (NLP) features that allow it to index linguistic data. Solr is one of the most popular search indexing servers built on Lucene Core. Elasticsearch is similar to Solr and is also built on Lucene; it is often said to be faster and tuned to work efficiently in any business domain.
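The core data structure behind this kind of search indexing is the inverted index: a map from each term to the documents that contain it. The sketch below is a toy version in plain Python, not the Lucene, Solr or Elasticsearch API; `tokenize`, `build_index` and `search` are hypothetical names, and the tokenizer is far cruder than a real analyzer.

```python
from collections import defaultdict


def tokenize(text):
    """Crude stand-in for an analyzer: lowercase, split on whitespace,
    and strip a few punctuation characters from the ends of each token."""
    tokens = (token.strip(".,!?") for token in text.lower().split())
    return [token for token in tokens if token]


def build_index(documents):
    """Map each term to the set of document ids that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for term in tokenize(text):
            index[term].add(doc_id)
    return index


def search(index, query):
    """Return ids of documents that contain every term in the query."""
    terms = tokenize(query)
    if not terms:
        return set()
    result = set(index.get(terms[0], set()))
    for term in terms[1:]:
        result &= index.get(term, set())
    return result


if __name__ == "__main__":
    docs = {
        1: "Solr uses Lucene Core.",
        2: "Elasticsearch also uses Lucene.",
        3: "Hadoop stores data.",
    }
    index = build_index(docs)
    print(search(index, "lucene uses"))
```

Looking up a term is a dictionary access rather than a scan of every document, which is why this structure scales to large collections.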

- Apache Pig is a platform for analyzing huge data sets on Hadoop. Its high-level language, Pig Latin, is easy to use without much prior programming knowledge - https://pig.apache.org/

- Hive is a SQL-like data analysis framework for big data on Hadoop. Its query language is called HiveQL.

- Apache Oozie is a workflow scheduler that keeps track of ELT and MapReduce jobs - http://oozie.apache.org/

- Azkaban is similar to Oozie. It is a batch workflow scheduler developed by LinkedIn - https://azkaban.github.io/
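What a workflow scheduler fundamentally has to do is run jobs in an order that respects their dependencies. The sketch below shows that idea with Python's standard `graphlib` module (Python 3.9+). It is not how Oozie or Azkaban are configured or implemented; `run_workflow` and the job names are invented for illustration.

```python
from graphlib import TopologicalSorter


def run_workflow(dependencies, actions):
    """Run every job after all of its upstream jobs have finished.

    dependencies maps a job name to the set of jobs it depends on.
    actions maps a job name to a zero-argument callable.
    Returns the order in which the jobs were executed.
    """
    order = []
    # static_order() raises graphlib.CycleError if the jobs form a cycle.
    for job in TopologicalSorter(dependencies).static_order():
        actions[job]()
        order.append(job)
    return order


if __name__ == "__main__":
    deps = {
        "load": {"extract"},
        "transform": {"load"},
        "report": {"transform"},
    }
    actions = {name: (lambda n=name: print("running", n))
               for name in ("extract", "load", "transform", "report")}
    print(run_workflow(deps, actions))
```

Real schedulers add the parts this leaves out, such as time-based triggers, retries, and tracking job status across a cluster.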

- Apache Sqoop is a tool for transferring data between Hadoop and structured data stores such as relational databases - http://sqoop.apache.org/

- Hue is a web application interface for Hadoop - http://gethue.com/

- Apache HBase is a column-oriented distributed data store. HBase stores each value in a cell, and a cell is identified by its [rowkey, column family, column qualifier] coordinate within a table. Its design and development were inspired by Google's Bigtable.
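The cell coordinate described for HBase can be pictured as a dictionary keyed by a three-part tuple. The following is a toy in-memory model in plain Python, not the HBase client API; `ToyTable` and its methods are hypothetical, and it ignores details of the real system such as cell versioning by timestamp and byte-array storage.

```python
class ToyTable:
    """In-memory model of a table addressed by (row key, family, qualifier)."""

    def __init__(self):
        # Each cell value is stored under its full three-part coordinate.
        self._cells = {}

    def put(self, row_key, family, qualifier, value):
        """Store a value in the cell at the given coordinate."""
        self._cells[(row_key, family, qualifier)] = value

    def get(self, row_key, family, qualifier):
        """Return the value at the coordinate, or None if the cell is empty."""
        return self._cells.get((row_key, family, qualifier))

    def row(self, row_key):
        """Return all cells of one row as {(family, qualifier): value}."""
        return {
            (family, qualifier): value
            for (key, family, qualifier), value in self._cells.items()
            if key == row_key
        }


if __name__ == "__main__":
    table = ToyTable()
    table.put("user1", "info", "name", "Alice")
    table.put("user1", "info", "email", "alice@example.com")
    table.put("user2", "info", "name", "Bob")
    print(table.get("user1", "info", "name"))
    print(table.row("user1"))
```

Rows in this model do not need to share the same set of qualifiers, which mirrors the flexibility of a column-oriented store compared with a fixed relational schema.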

The list above is incomplete, and in the IT world it always will be. In future posts I'll try to discuss each of these technologies in more detail.