Apache Spark is a powerful big data processing engine for data analysis and manipulation.

In my previous blog post (Installing PySpark - SPARK), we discussed how to build and successfully run the PySpark shell. For development, however, the PySpark module should also be accessible from our familiar editor.

Initially, I tried the PyCharm Preferences settings and added the PySpark module as an external library (Figure 1), but the editor couldn't resolve the reference (Figure 2). Hence, I ended up adding the reference at runtime from within the Python script.

In the following code, set the appropriate SPARK_HOME directory and PySpark folder so that the Apache Spark modules can be imported successfully.

import os

import sys

# Path for spark source folder

os.environ['SPARK_HOME']="your_spark_home_folder"

# Append pyspark to Python Path

sys.path.append("your_pyspark_folder ")

try:

from pyspark import SparkContext

from pyspark import SparkConf

print ("Successfully imported Spark Modules")

except ImportError as e:

print ("Can not import Spark Modules", e)

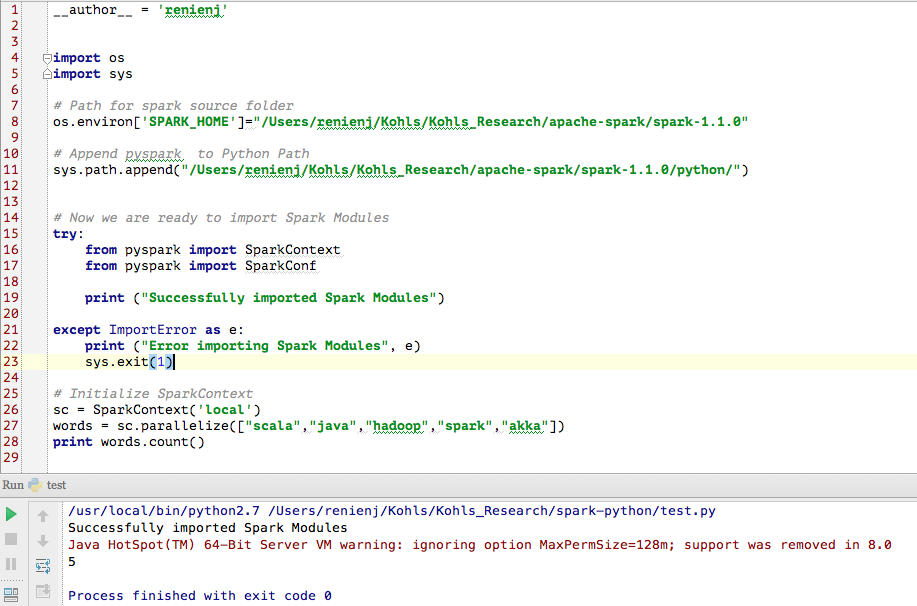

sys.exit(1)After executing the code it should print “Successfully imported Spark Modules” (Figure 3).
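The script above relies on hand-typed paths. As a minimal sketch, assuming the standard Spark binary distribution layout (PySpark under `$SPARK_HOME/python`, with its Py4J dependency shipped as `$SPARK_HOME/python/lib/py4j-*-src.zip`), the paths can instead be derived from SPARK_HOME. The helper names `pyspark_paths` and `configure_pyspark` are hypothetical, not part of PySpark.

```python
import glob
import os
import sys


def pyspark_paths(spark_home):
    """Return the entries that must be on sys.path to import pyspark.

    Assumes the standard binary distribution layout:
    <spark_home>/python and <spark_home>/python/lib/py4j-*-src.zip
    """
    python_dir = os.path.join(spark_home, "python")
    # The Py4J version differs between Spark releases, so match it with a wildcard
    py4j_zips = sorted(glob.glob(os.path.join(python_dir, "lib", "py4j-*-src.zip")))
    return [python_dir] + py4j_zips


def configure_pyspark(spark_home):
    """Hypothetical helper: set SPARK_HOME and append the PySpark paths once."""
    os.environ["SPARK_HOME"] = spark_home
    for path in pyspark_paths(spark_home):
        if path not in sys.path:
            sys.path.append(path)
```

Calling `configure_pyspark("/path/to/spark")` before the `from pyspark import ...` lines would replace the two hard-coded assignments. The Py4J zip is included because PySpark imports `py4j` to communicate with the JVM, and that zip is not on the Python path by default.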

Now let's write a small Spark task to check whether PySpark works. Add the following code after importing the PySpark module and run it (Figure 4).

```python
# Initialize SparkContext in local mode
sc = SparkContext('local')

words = sc.parallelize(["scala", "java", "hadoop", "spark", "akka"])

# Should print 5
print(words.count())

# Release the local Spark resources
sc.stop()
```
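The count confirms that Spark runs, but the `SparkConf` import in the first script is never used. A slightly fuller smoke test is sketched below, assuming the paths are already configured (or PySpark is installed as a regular package). It builds the context from a `SparkConf`, runs a filter, and stops the context afterwards. `run_smoke_test` and `is_long_word` are illustrative names, not part of PySpark.

```python
def is_long_word(word, min_len=5):
    """Predicate used by the RDD filter; plain Python, so it is easy to test."""
    return len(word) >= min_len


def run_smoke_test(words):
    # Same import guard as the setup script above
    try:
        from pyspark import SparkConf, SparkContext
    except ImportError as e:
        print("Can not import Spark Modules", e)
        return None

    # "local[*]" runs Spark in-process using all available cores
    conf = SparkConf().setMaster("local[*]").setAppName("PySparkSmokeTest")
    sc = SparkContext(conf=conf)
    try:
        rdd = sc.parallelize(words)
        return rdd.count(), rdd.filter(is_long_word).collect()
    finally:
        # Only one SparkContext may be active per process, so always stop it
        sc.stop()


if __name__ == "__main__":
    print(run_smoke_test(["scala", "java", "hadoop", "spark", "akka"]))
```

With a working setup, the script should print `(5, ['scala', 'hadoop', 'spark'])`. Stopping the context in `finally` lets the script be rerun from the same PyCharm console without a "cannot run multiple SparkContexts" error.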